This is the second blog in a two-part series. In part one, Secure Coding Best Practices, Matthew Butler covers the basics of the threat landscape and his best practices for developers writing secure code. In this second installment, he looks at architecture design choices and testing strategies. This material comes from his new book Exploiting Modern C++: Writing Secure Code for an Insecure World due out later this year.

Security is built in layers with the last layer being the code itself. In the first part of this series, we looked at best practices for secure software development. So what are some of the best practices for secure architecture design and testing?

All Testing Is Asymmetrical

In The Art of War, Sun Tzu said, “Every battle is won or lost before it is ever fought.” Building secure systems is never about luck. If anything, the luck will be on the other side. The Navy SEALs put it another way, “Luck is the residue of design.” Building secure systems is about intention. It’s about planning. It’s about design. And this also applies to testing.

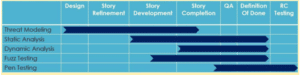

Testing for security involves a number of different techniques, each focusing on a different aspect of security and each coming at a different time in the development cycle. These include static analysis, dynamic analysis, fuzz testing, and penetration testing.

Testing Across the Software Development Life Cycle

Source: Matthew Butler

Static Analysis Tools

You probably already use static analysis tools as part of your build system. These tools look for code and API errors by parsing source code. Because they’re automated checks based on rules, they tend to produce a lot of false positives and can be easily defeated by simple variances in the code. In C++, for example, static analysis may flag uses of the function strcpy(). But strncpy() (strcpy() limited to n characters) can be just as dangerous, because it leaves the destination without a terminating null when the source fills the buffer, and it is rarely caught. Static analysis tools work well for finding simple, fundamental API errors, like a failure to check returns, but can’t detect complex coding errors, environmental problems, or third-party library vulnerabilities.
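
To make the strncpy() hazard concrete, here is a minimal sketch. The helper names `strncpy_left_unterminated` and `copy_terminated` are hypothetical, invented for illustration. The first shows the unterminated-buffer case that a rule keyed only on strcpy() would likely miss; the second is one possible safer alternative, not the only one.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string_view>

// Hypothetical demo: copies with strncpy() and reports whether the
// destination was left without a terminating '\0'. That happens whenever
// src holds N or more characters.
template <std::size_t N>
bool strncpy_left_unterminated(std::array<char, N>& dst, const char* src) {
    assert(src != nullptr);
    std::strncpy(dst.data(), src, N);
    return std::memchr(dst.data(), '\0', N) == nullptr;
}

// Hypothetical alternative: always terminates the destination and tells
// the caller when the source had to be truncated.
template <std::size_t N>
bool copy_terminated(std::array<char, N>& dst, std::string_view src) {
    static_assert(N > 0, "destination must have room for the terminator");
    const std::size_t n = std::min(src.size(), N - 1);
    std::copy_n(src.data(), n, dst.data());
    dst[n] = '\0';
    return n == src.size();  // false means the input was truncated
}
```

With an 8-byte buffer, copying the 8-character string "12345678" through strncpy() leaves no terminator, so any later strlen() or printf("%s") on that buffer reads past its end.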

Dynamic Analysis Tools

Dynamic analysis tools check for vulnerabilities in running software by adding hooks into the software to look at memory leaks, concurrency problems, and dangerous behavior. Dynamic analysis depends on having a strong set of test cases to exercise the software and is best started when you have fairly mature functionality. These tools really shine, though, during performance, stress, and scalability testing, which comes later in the development cycle.

Fuzz Testing

Fuzz testing is an extremely powerful, but often overlooked, testing approach that exposes failures in the situational awareness of your interfaces. These tools can be used for both class and function interfaces at the source-code level along with your internal IPC and external interfaces. Many of them use genetic algorithms that interpret the responses from previous tests to create increasingly complex patterns of inputs. While fuzz testing can be a challenge to deploy, I highly recommend this testing to ensure the robustness of your interfaces and data validation code.
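
As a rough sketch of what a source-level fuzz target can look like, the harness below assumes LLVM's libFuzzer as the engine (other fuzzers use different entry points). `parse_record` and its one-byte length prefix format are hypothetical stand-ins for an interface of your own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>

// Hypothetical interface under test: a record is one length byte followed
// by exactly that many payload bytes. Anything else is rejected.
std::optional<std::string> parse_record(const std::uint8_t* data, std::size_t size) {
    if (data == nullptr || size == 0) return std::nullopt;
    const std::size_t declared = data[0];
    if (declared != size - 1) return std::nullopt;  // never trust the length field
    return std::string(reinterpret_cast<const char*>(data + 1), declared);
}

// libFuzzer entry point: the engine calls this repeatedly with mutated inputs.
// Assumed build line: clang++ -g -fsanitize=fuzzer,address harness.cpp
extern "C" int LLVMFuzzerTestOneInput(const std::uint8_t* data, std::size_t size) {
    const auto record = parse_record(data, size);
    if (record) {
        // Invariant checked on every accepted input.
        assert(record->size() == size - 1);
    }
    return 0;  // 0 tells the engine the input was processed normally
}
```

Under libFuzzer the engine supplies main() and feeds the entry point each generated input; a crash, sanitizer report, or failed assertion is saved as a reproducer you can replay later.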

Penetration Testing

For most software engineers, penetration testing (or just pen testing) is a mystery. This type of testing uses the same techniques hackers use to exploit systems. And while pen testing does require a significant level of security knowledge, most developers are more than capable of learning the techniques and even excel at pen testing their software because of their intimate knowledge of the code base; they already know where the bodies are buried.

The chart above shows the different types of testing and the best points in your development cycle to use them to the greatest effect. With testing, there is no silver bullet. Static analysis is not a silver bullet. Functional testing is not a silver bullet. It takes a well-designed test program to catch the worst and most deadly vulnerabilities.

Secure Coding Starts with Secure Designs: Threat Modeling

Code is only as secure as the foundation it rests on. That means building a secure architecture first and making sure it’s secure before you start coding. Once the code is written and you’ve cut a release candidate, it’s generally too late to go back and fix an insecure architecture.

That’s where threat modeling comes in. Threat modeling is the process by which we identify the attack vectors and attack surfaces we talked about earlier. It helps you understand the nature of the threats and the nature of the individuals attacking your systems.

The good news is that you’ve been threat modeling all of your life. You started when your parents taught you to look both ways before crossing the street. You do it every time you drive, every time you’re in a strange city. Darkness, isolation, insecurity, vulnerability are all areas where we begin to intuitively model the threats around us. Modeling threats to our systems is no different.

Trust boundaries are an important part of threat modeling. Trust boundaries occur when data moves across areas with different levels of trust. Trust is inversely proportional to risk. For example, the Internet is high risk because it is low trust, while internal networks are lower risk because you trust them. The new normal, though, is a zero-trust environment in which your application always has to authenticate who it’s talking to even if it’s a web server on the same box.

There are six basic stages in threat modeling:

- Scope definition. At this stage you’re identifying the parts of your system that you want to threat model. If you carve off too broad a swath, you’ll drown in the data. Too narrow and you’ll miss threats. Think of this as an iterative process where you start narrowly and expand outward.

- Model creation. Model creation involves creating a data flow diagram (DFD). The DFD shows how data flows through the system from producers to consumers. It serves as a reference design for your later work.

- Threat identification. This is the longest and often hardest part of threat modeling. It begins by identifying trust boundaries and the exposed attack surfaces. As each attack surface is established, you then identify what types of attack vectors can be deployed against those surfaces. Along the way you’ll find data that you’re holding that you don’t need, access that you’re allowing that you shouldn’t, and feature functionality that is now obsolete.

- Threat classification. As each new threat is discovered, it is classified by the type and amount of data that is exposed and the ease with which it’s compromised. Fibonacci numbers are a good choice for classifying threats: the higher the number, the worse the vulnerability.

- Mitigation planning. Not all vulnerabilities can be addressed in the next release. At this point you’ll need to prioritize the vulnerabilities, putting the low severity ones into your tech debt, the moderate ones into your backlog, and the high severity ones on your radar for today.

- Validation. This stage has two parts: making sure that you fixed the underlying vulnerability and making sure that you didn’t create any other vulnerabilities. Remember the lesson from Dirty COW.
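
The classification and mitigation planning stages above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Threat` fields, the rule that severity is the worse of the two scores, and the thresholds that separate tech debt, backlog, and today are all hypothetical choices, not a prescribed scheme.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <string>
#include <vector>

// Fibonacci scale used for scoring; higher is worse.
constexpr std::array<int, 6> kScale{1, 2, 3, 5, 8, 13};

enum class Bucket { TechDebt, Backlog, Today };

struct Threat {
    std::string name;
    int exposure;  // type and amount of data exposed
    int ease;      // how easily it can be compromised
};

// True if the score is one of the allowed Fibonacci values.
bool on_scale(int score) {
    return std::find(kScale.begin(), kScale.end(), score) != kScale.end();
}

// Assumed policy: overall severity is the worse of the two scores.
int severity(const Threat& t) {
    assert(on_scale(t.exposure) && on_scale(t.ease));
    return std::max(t.exposure, t.ease);
}

// Assumed thresholds: 1-2 low, 3-5 moderate, 8-13 high.
Bucket plan(const Threat& t) {
    const int s = severity(t);
    if (s >= 8) return Bucket::Today;
    if (s >= 3) return Bucket::Backlog;
    return Bucket::TechDebt;
}

// Orders threats worst-first so the riskiest items are handled first.
void prioritize(std::vector<Threat>& threats) {
    std::stable_sort(threats.begin(), threats.end(),
                     [](const Threat& a, const Threat& b) {
                         return severity(a) > severity(b);
                     });
}
```

Keeping the scores on a fixed scale makes it harder to argue a threat down by one point, and the sorted list gives the validation stage an obvious order to work through.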

Ruthlessness Is a Virtue

We often don’t think in terms of ruthlessness, but the other side does. Most code reviews focus on line lengths, naming, and algorithms, but there is a certain need for ruthlessness in looking for ways to break code. Will the interfaces accept data the code isn’t expecting? What happens when the code encounters an exception? The goal of a good security-driven code review is not to embarrass our colleagues; it’s to find those hidden vulnerabilities before the other side does.

Here are some of the things I look for in code reviews:

- Memory. I look at every instance where you touch, copy, or move memory because you’re likely to get it wrong. This includes using pointers, indexes, and iterators.

- Data validation. Are you sanitizing the data your code is working on? Anytime you don’t validate data or verify users, you have a vulnerability. DoS attacks are nothing more than you accepting bad data from an untrusted source and trying to process it.

- Internal interfaces. Are your IPC interfaces open? What can I send to them? Do they require authentication? Are you using command line interfaces (CLIs)? CLIs are horribly insecure because they act as internal interfaces exposed to the outside world, creating access points into your internal code. Not only do CLIs give you a nice menu of commands when you run them, they also don’t require authentication. Anyone logged in can run them.

- Complexity. Complexity is the enemy. Period. Are you using established, standard algorithms or rolling your own? Is your code easy to understand, clean? Is it as simple as you can make it? Forget all the programming tricks and the “advanced” syntax. Write clean, simple code on top of clean, simple designs. Ignore the developers who love to show off by writing complicated code. They’re just cutting their own throats and yours at the same time.

- Data spills. Are you saying too much in your log entries? In your error messages? Remember that the other side reads error messages and logs too. Anytime you write privileged information into a log or error message, you are providing hackers with an opportunity to learn something about your system. If you have taken the time to protect privileged information, don’t undo your protection by writing it into a log.
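
Two of the review items above, memory and data validation, lend themselves to a short sketch. Both helpers below, `parse_port` and `read_u16_be`, are hypothetical names made up for this example; they show the kind of strict, reject-by-default checks a reviewer would hope to find, assuming a C++17 toolchain.

```cpp
#include <charconv>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string_view>
#include <system_error>
#include <vector>

// Data validation: accept only a complete decimal number in 1..65535.
std::optional<unsigned> parse_port(std::string_view text) {
    if (text.empty()) return std::nullopt;
    unsigned value = 0;
    const char* first = text.data();
    const char* last = first + text.size();
    const auto [ptr, ec] = std::from_chars(first, last, value);
    if (ec != std::errc{} || ptr != last) return std::nullopt;  // junk or overflow
    if (value < 1 || value > 65535) return std::nullopt;        // out of range
    return value;
}

// Memory: a bounds-checked big-endian read from an untrusted buffer.
// The check is written so that it cannot overflow for any offset.
std::optional<std::uint16_t> read_u16_be(const std::vector<std::uint8_t>& buf,
                                         std::size_t offset) {
    if (offset > buf.size() || buf.size() - offset < 2) return std::nullopt;
    return static_cast<std::uint16_t>((buf[offset] << 8) | buf[offset + 1]);
}
```

Both functions return an empty optional instead of a best guess, which forces the caller to decide what to do with bad input rather than processing it by accident.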

A couple more points on code reviews. Review the feature, not just the changes. I have approved code changes that created other vulnerabilities because I didn’t look at the complete context of the change. Linus Torvalds has done the same thing, so it’s OK. This applies even more to legacy code. That’s the code no one wants to touch, and yet it often hides the worst vulnerabilities. Don’t be afraid to dig into it, and remember that today’s code becomes tomorrow’s legacy code, so if you miss something now, you’ll pay for it later.

Usually, painfully so.

You’re Only as Strong as Your Weakest Third-Party Library

Open source libraries introduce significant risk into your codebase. With the explosion of third-party libraries in almost every language, we’re building systems with code we didn’t write, that hasn’t been code reviewed for security, and whose source code is completely exposed for hackers to exploit. There are numerous examples of libraries being subverted to do everything from cryptocurrency mining to stealing SSL credentials, all without the users of the libraries knowing about it. Their defects become your defects; their vulnerabilities become your vulnerabilities.

But there are ways to mitigate the risks when using open source code. First, review the code yourself to verify that it is acting as you expect it to. Put the libraries into a test lab and look at what they do. If, for example, you’re using a library that doesn’t have anything to do with networking but it’s opening sockets, you need to ask why. You can also mitigate risks in third-party libraries by building security wrappers that sanitize the data being passed to them and deal with exceptions coming from the library.
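
A security wrapper can be quite small. The sketch below is hypothetical throughout: `thirdparty::render` stands in for a real library call, and the allow-list rules and the `kMaxInput` limit are assumptions you would replace with whatever your own library and data actually require.

```cpp
#include <cstddef>
#include <exception>
#include <optional>
#include <stdexcept>
#include <string>
#include <string_view>

// Stand-in for a third-party call; hypothetical, not a real library.
namespace thirdparty {
inline std::string render(const std::string& input) {
    if (input.size() > 64) throw std::length_error("internal limit exceeded");
    return "<p>" + input + "</p>";
}
}  // namespace thirdparty

constexpr std::size_t kMaxInput = 256;  // assumed application limit

// Allow-list validation: printable ASCII only, no markup characters.
bool is_acceptable(std::string_view input) {
    if (input.empty() || input.size() > kMaxInput) return false;
    for (const unsigned char c : input) {
        if (c < 0x20 || c > 0x7E || c == '<' || c == '>' || c == '&') return false;
    }
    return true;
}

// Security wrapper: validates before the call and contains exceptions after it,
// so nothing the library throws can escape into the rest of the system.
std::optional<std::string> safe_render(std::string_view input) noexcept {
    if (!is_acceptable(input)) return std::nullopt;
    try {
        return thirdparty::render(std::string(input));
    } catch (const std::exception&) {
        return std::nullopt;  // report a generic failure, not library details
    } catch (...) {
        return std::nullopt;
    }
}
```

Callers only ever see a value or an empty optional, which also keeps library error text out of your logs and error messages.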

Keep in mind that I’m not saying that you should stop using third-party libraries. But you do assume the risk they represent and need to protect your systems from them. Trust, but verify.

Building Secure Software

Building secure software is like any other human endeavor. It takes knowledge, persistence, and intention. Our greatest weakness isn’t that we can’t do it. It’s that we think there is this divide between the inside and outside, the safe and the unsafe. The reality is that there are no safe spaces. We live in a zero-trust world where the last line of defense against tomorrow’s vulnerabilities is the code we design, write, test, and deploy today.

If you’re interested in learning more about developing secure systems, check out part one of this series, Secure Coding Best Practices.